Chatbot Agent 🧠

Objectives ✅

- Use different message types - HumanMessage and AIMessage.

- Maintain a full conversation history using both message types.

- Create a conversation loop that alternates user input and model replies until a message limit is reached.

from typing import List, TypedDict, Union

from langchain_core.messages import AIMessage, HumanMessage
from langchain_ollama import OllamaLLM
from langgraph.graph import StateGraph, START, END


class AgentState(TypedDict):
    # Full conversation history: human and AI messages in order.
    messages: List[Union[HumanMessage, AIMessage]]


ollama_model = "llama3.1:8b"
llm = OllamaLLM(temperature=0.8, model=ollama_model)

Without Memory (History)

from IPython.display import Markdown, display

def process(state: AgentState) -> AgentState:

# Extract the content from the last HumanMessage

prompt = state["messages"][-1].content

response = llm(prompt)

state["messages"].append(AIMessage(content=response))

return state

def start_node(state: AgentState) -> AgentState:

prompt = "Hello! Who are you?"

response = llm(prompt)

state["messages"].append(AIMessage(content=response))

display(Markdown(f"AI: \n{state['messages'][-1].content}\n"))

return state

def end_node(state: AgentState) -> AgentState:

state["messages"].append(AIMessage(content="Rate limit of 3 messages reached. Please try again later."))

display(Markdown(f"AI: \n{state['messages'][-1].content}\n"))

return state

def take_user_input(state: AgentState) -> AgentState:

user_input = input("You: ")

state["messages"].append(HumanMessage(content=user_input))

print("HUMAN:", state["messages"][-1].content)

return state

def should_continue(state: AgentState) -> AgentState:

"""Decide whether to continue looping based on the counter."""

display(Markdown(f"AI: \n{state['messages'][-1].content}\n"))

if len(state["messages"]) < 6:

return "loop"

else:

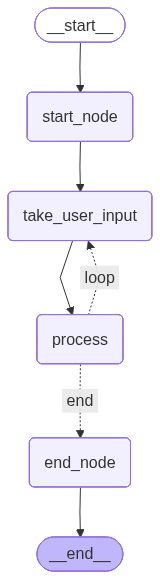

return "end"graph = StateGraph(AgentState)

graph.add_node("start_node", start_node)
graph.add_node("take_user_input", take_user_input)
graph.add_node("process", process)
graph.add_node("end_node", end_node)

graph.add_edge(START, "start_node")
graph.add_edge("start_node", "take_user_input")
graph.add_edge("take_user_input", "process")

graph.add_conditional_edges(
    "process",
    should_continue,
    {
        # Edge: Node
        "loop": "take_user_input",
        "end": "end_node",
    },
)

graph.add_edge("end_node", END)

app = graph.compile()
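The threshold of 6 in should_continue can look arbitrary. The library-free sketch below traces the message count through the same node order (start_node once, then take_user_input and process in a loop) to show how many user turns fit before the router returns "end". The names `route_by_count` and `trace_turns` are hypothetical helpers for illustration; nothing here calls the model or LangGraph.

```python
def route_by_count(message_count: int, limit: int = 6) -> str:
    """Same rule as the router in the graph, applied to a plain message count."""
    return "loop" if message_count < limit else "end"


def trace_turns(limit: int = 6) -> int:
    """Count the user turns that happen before the router returns "end".

    Hypothetical helper: it mimics the graph's control flow without an LLM.
    """
    message_count = 1  # start_node appends one AI greeting
    user_turns = 0
    while True:
        message_count += 1  # take_user_input appends a HumanMessage
        user_turns += 1
        message_count += 1  # process appends an AIMessage
        if route_by_count(message_count, limit) == "end":
            return user_turns


print(trace_turns())  # prints 3
```

With the default limit the counts after each reply are 3, 5, and 7, so the loop ends after the third user message, which matches the "Rate limit of 3 messages" notice.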

OUTPUT:

AI: "I'm an artificial intelligence model known as Llama. Llama stands for Large Language Model Meta AI."

HUMAN: Rasputin

AI: "The infamous Grigori Yefimovich Rasputin! A figure shrouded in mystery and controversy, associated with the downfall of the Russian monarchy. Here's a brief overview:

Early Life:

Born around 1869 in Siberia, Russia, Rasputin was born into a peasant family. His early life is somewhat of a myth, but it's believed he was raised in an Orthodox Christian household and developed a strong ...."

HUMAN: What about the song ?

AI: "I don't think we were discussing a specific song. This conversation just started. Would you like to talk about a particular song or ask for recommendations? I'm here to help!"

HUMAN: I forgot you do not have memory XD

AI: "That's a clever reference to the fact that I'm a large language model, I don't have personal memories or experiences like humans do. Each time you interact with me, it's a new conversation and I start from a blank slate. But I'll play along - how can I help you today?"

AI: "Rate limit of 3 messages reached. Please try again later."
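The model lost the thread here because process sends only the latest message to the LLM; the earlier turns stored in state["messages"] never reach it. One possible way to use the stored history, sketched below under assumptions, is to render every message into a single prompt. The `Message` dataclass, `render_history`, `process_with_history`, and `echo_llm` are hypothetical stand-ins (for the LangChain message classes, the node, and the Ollama model) so the example runs without dependencies.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Message:
    """Hypothetical stand-in for HumanMessage / AIMessage."""
    role: str  # "human" or "ai"
    content: str


def render_history(messages: List[Message]) -> str:
    """Flatten the whole conversation into one prompt string."""
    lines = [f"{m.role.upper()}: {m.content}" for m in messages]
    lines.append("AI:")  # cue the model to answer as the assistant
    return "\n".join(lines)


def process_with_history(messages: List[Message], llm: Callable[[str], str]) -> List[Message]:
    """Call the model with the full history instead of only the last message."""
    reply = llm(render_history(messages))
    messages.append(Message(role="ai", content=reply))
    return messages


def echo_llm(prompt: str) -> str:
    """Fake model: reports how many human turns were visible in the prompt."""
    seen = prompt.count("HUMAN:")
    return f"I can see {seen} human message(s)."


history = [
    Message("human", "Rasputin"),
    Message("ai", "Grigori Rasputin was a Russian mystic."),
    Message("human", "What about the song?"),
]
history = process_with_history(history, echo_llm)
print(history[-1].content)  # prints: I can see 2 human message(s).
```

The trade-off of this approach is that the prompt grows with every turn, which is one reason to condense older turns instead of replaying them verbatim.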

With ConversationSummaryMemory (LangChain Conversational Memory)

# Assumed import paths (classic LangChain 0.x); adjust them for your installed version.
from langchain.chains import ConversationChain
from langchain.memory import ConversationSummaryMemory

ollama_model = "llama3.1:8b"
llm = OllamaLLM(temperature=0.8, model=ollama_model)

# The memory uses the same LLM to keep a running summary of the conversation.
memory = ConversationSummaryMemory(llm=llm)
memory.clear()

conversation = ConversationChain(
    llm=llm,
    memory=memory,
)


def process(state: AgentState) -> AgentState:
    # Route the latest human message through the memory-backed chain.
    prompt = state["messages"][-1].content
    response = conversation.predict(input=prompt)
    state["messages"].append(AIMessage(content=response))
    return state


def start_node(state: AgentState) -> AgentState:
    # Open the conversation through the same chain so the greeting is remembered.
    prompt = "Hello! Who are you?"
    response = conversation.predict(input=prompt)
    state["messages"].append(AIMessage(content=response))
    display(Markdown(f"AI: \n{state['messages'][-1].content}\n"))
    return state

OUTPUT:

HUMAN: Hi!

AI: "I'm an artificial intelligence model known as Llama. Llama stands for Large Language Model Meta AI."

HUMAN: Rasputin

AI: "The infamous Grigori Yefimovich Rasputin! A figure shrouded in mystery and controversy, associated with the downfall of the Russian monarchy. Here's a brief overview:

Early Life:

Born around 1869 in Siberia, Russia, Rasputin was born into a peasant family. His early life is somewhat of a myth, but it's believed he was raised in an Orthodox Christian household and developed a strong ...."

HUMAN: What about the song ?

AI: "AI: The song! I think I'm starting to get a sense of where this might be going. I've been trained on a vast collection of music-related texts, including lyrics and discographies from various artists. I believe there's a classic rock song called "Rasputin" by Boney M, released in 1978. The song tells the story of Grigori Rasputin, a Russian mystic who gained significant influence over the Romanov dynasty before his eventual assassination in 1916. Is that the context you were thinking of?"

AI: "Rate limit of 3 messages reached. Please try again later."
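ConversationSummaryMemory keeps a running summary of the dialogue and supplies it with each new input, which is why the model could connect "the song" to Rasputin in this run. The sketch below illustrates that idea only; it is not LangChain's implementation. `RollingSummaryMemory` and `truncating_summarizer` are hypothetical names, and the summarizer is a trivial stand-in for the LLM call that would normally condense the text.

```python
from typing import Callable


def truncating_summarizer(text: str, max_chars: int = 200) -> str:
    """Stand-in for an LLM summarizer: keeps only the most recent characters."""
    return text[-max_chars:]


class RollingSummaryMemory:
    """Hypothetical sketch of summary-style memory (not the LangChain class)."""

    def __init__(self, summarize: Callable[[str], str]) -> None:
        self.summarize = summarize
        self.summary = ""

    def save_turn(self, human: str, ai: str) -> None:
        """Fold the newest exchange into the running summary."""
        combined = f"{self.summary}\nHuman: {human}\nAI: {ai}".strip()
        self.summary = self.summarize(combined)

    def build_prompt(self, new_input: str) -> str:
        """Prepend the summary so the model sees earlier context."""
        return f"Summary so far:\n{self.summary}\n\nHuman: {new_input}\nAI:"

    def clear(self) -> None:
        """Forget everything, similar in spirit to memory.clear() above."""
        self.summary = ""


memory_sketch = RollingSummaryMemory(truncating_summarizer)
memory_sketch.save_turn("Rasputin", "Grigori Rasputin was a Russian mystic.")
prompt = memory_sketch.build_prompt("What about the song?")
print(prompt)
```

Because the prompt for the follow-up question now carries the earlier exchange, a model receiving it has the context needed to resolve "the song". A real summarizer would compress older turns rather than truncate them.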
